The Green Vision for AI - Part 2: Paths and Impacts in an Age of Limits

How the LLM AI Bubble accelerates environmental collapse and damages society

The tech industry once promised to be a force for good: that information wanted to be free, that social media would bring people together, that electronic services would be delivered sustainably. But when the early winners had to choose between further growth and any pretence of being ethical, they chose growth. In that pursuit, they exploited workers, enabled the spread of disinformation and hate, damaged children’s mental health, and worked with the military and authoritarian regimes. Their aims to be environmentally sustainable survived longer, but then along came the perceived new opportunities of Large Language Model (LLM) AI, and so over the last few years these targets have also been delayed, watered down or dropped. Today, we have a tech oligopoly made up of a small number of globe-spanning, immensely profitable but unethical and environmentally unsustainable companies, and a wider tech industry bought into those models and values.

In Part 1, “Hype, Hallucinations and Hyperscalers”, I set out how LLM AI came to be positioned as the tech industry’s next big thing, and how the industry’s structure, culture and incentives made its embrace of LLM AI inevitable. I also set out why LLM AI cannot deliver on the hype, and hence why I believe it is now a bubble.

We are now in an age of limits, with only a short window left for determining whether we reach some form of sustainability or whether we lock in collapse. Even if LLM AI were just another relatively benign bubble, it would now be a distraction we cannot afford. But it is much worse than that, and so in this Part 2 I set out the real agendas driving the LLM AI industry, the scenarios that these create, and how the investment of a globally significant amount of time, money and resources (physical and people) into LLM AI, on top of an already unethical and unsustainable tech industry, is accelerating damage to our society and the environment globally.

Four Futures (none of them Green)

Artificial Super Intelligence

“And eventually, all life on Earth will be destroyed by the sun. It's gradually expanding, we do at some point have to be multi-planetary civilization because Earth will be incinerated…” Elon Musk

A group of US tech industry billionaires, obsessed with a libertarian, techno-utopian agenda (“longtermism” and “transhumanism”), see the development of true Artificial Super Intelligence (ASI) as both imminent and a prerequisite for the next stage of the evolution of humanity into a multi-planetary species with a population numbering in the trillions. In their view, only they can lead humanity (or maybe just the “Technological Supermen”) into this ASI golden age, a belief that entrenches extreme elitism and extraordinary hubris. They also believe that ASI embodies a benign version of the Führer principle. So nothing must get in the way of the pursuit of that goal - for them, the ends really do justify the means, however brutal and however much has to be risked - with the LLM AI Bubble key to achieving this “vision”.

Scenario 1: Through the Stargate

“Although it will happen incrementally, astounding triumphs – fixing the climate, establishing a space colony, and the discovery of all of physics – will eventually become commonplace. With nearly-limitless intelligence and abundant energy – the ability to generate great ideas, and the ability to make them happen – we can do quite a lot.” Sam Altman, CEO OpenAI.

This gives us our first scenario, where the AI Accelerationists get their ASI - capable of answering all our problems and getting them implemented before we’ve locked in the collapse of global civilisation through ecosystem breakdown, leading the glorious few onwards to Mars. Clearly, they expect this “not Utopia, but also not Apocalypse” to also not include a large proportion of the world’s population.

Scenario 2: P(doom) High

A second group of AI evangelists are equally certain that ASI is possible and imminent, but instead of a benign deity, they believe that it will be humanity’s nemesis that will kill us all. Not really much more to say on this one!

Role in the Impact Assessment

As LLM AI performance is already questionable, neither of these two scenarios is likely or imminent. I will therefore only consider Scenario 1 from the perspective of how it motivates the over-investment in LLM AI regardless of the consequences, and Scenario 2 only from the more general perspective of regulating AI.

…and now back to (harsh) reality.

Power to the People Tech Bros

For most in the tech industry, the LLM AI bubble is simply the next phase of the industry’s addiction to cool new bleeding-edge tech, the pursuit of infinite growth, and the accumulation of shedloads more money. The claim to be working to empower people and solve the world’s problems provides a comforting cover story that justifies the tech industry’s consolidation of further power and wealth. But as set out in “Empire of AI”, in reality a hard-right, US-centric ideology underpins the industry:

“…companies like OpenAI are new forms of empire. Empires of old seized & extracted resources, and exploited the labor of the places they conquered to drive their own expansion & advancement. All the while, they justified their conquest by calling it a civilizing mission and promising the world boundless progress.

The empires of AI may not be engaged in the same overt violence. But they, too, seize & extract resources, from the data of billions of people online to the land, energy & water required to build and run massive data centers. They, too, exploit the labor of people globally, from the artists & writers whose work they turned into training data without consent to the data workers globally who prepare that data for spinning into AI models. They, too, seek to justify these ever-mounting costs with outlandish promises of the boundless progress AI will bring to humanity.

Why does this matter? There is a reason the world moved from empires to democracies. Empires enrich the tiny few at great expense to everyone else. Empires do not believe all humans are created equal. A return to empire is the unravelling of democracy.”

With this understanding of the real model underpinning the LLM AI industry (which is just a doubling down on the wider tech industry model), we can see two further Scenarios.

Scenario 3: LLM AIs eventually work “as intended”

Maybe the tech industry can manage to get LLM costs down and effectiveness up to a level that allows them to realise their “Empire of AI”:

Maybe delivers up to £15+trn to the global economy by 2030, but definitely concentrates more wealth and power in the hands of the US tech industry elite;

Makes tens of millions of people redundant globally as Agentic AI takes their jobs;

Undermines mental health, social cohesion and democracy;

Very significantly contributes to destroying the planet and hence global civilisation in the process.

Scenario 4: AI bubble bursts

As I set out in Part 1, the most likely outcome is that the wider economy stops believing the hype, and the LLM AI bubble bursts, leaving us with:

A wave of bankruptcies of LLM AI firms, with the larger tech firms picking up the most promising products, IPR, development teams etc.;

A general drop in share prices for the most ludicrously overvalued tech stocks;

Some industries where LLMs have made significant inroads (e.g. in the creative industries), but most industries remain relatively untouched;

Significant data centre overcapacity, with a lot of cheap compute power available;

A still quite significant impact on mental health, social cohesion, democracy and environmental sustainability.

Role in the Impact Assessment

Scenario 4 forms the basis of the impact assessment. But as a lot of time, money and brainpower is being devoted to achieving Scenario 3, it remains a real possibility even though it is not the most likely outcome, and so its more likely impacts also need to be included in the assessment.

Impacts in an Age of Limits

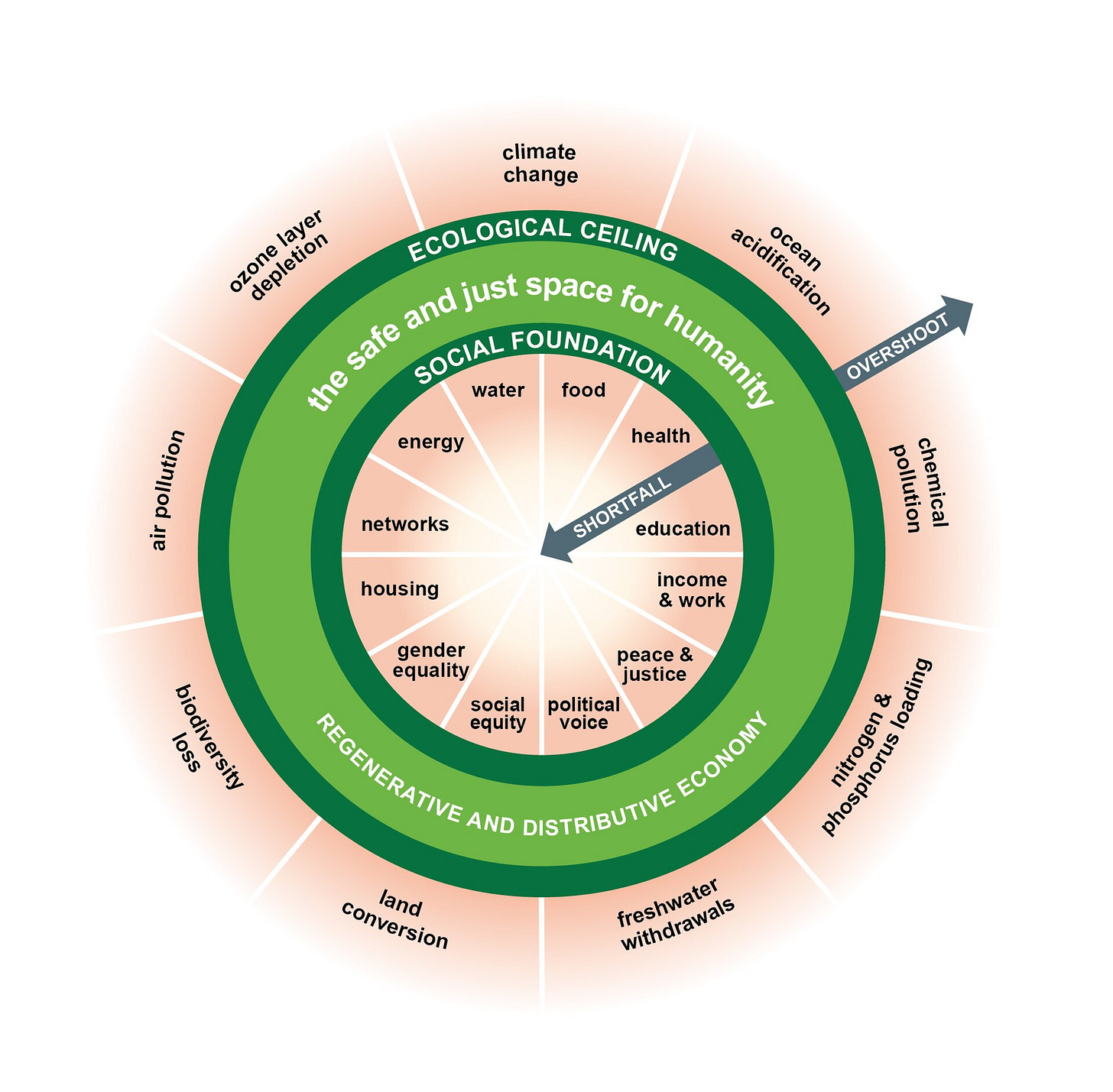

Global civilisation can only deliver social justice and environmental sustainability if it operates within its ecological ceiling and above its social foundation. But global civilisation is both very deep in overshoot and falling short of the social foundation for billions, driven by the imperatives of the consumption- and extraction-based global economy.

To compound this, we now have very little time remaining in which to avoid getting locked into a “doom loop”, whereby local environmental degradation and social breakdown exacerbate each other, preventing societies from using the limited remaining resources to stop further deterioration (“derailment risk”), and eventually passing tipping points that lock in local and then global environmental and societal collapse.

We therefore need to assess whether LLM AI helps deliver a regenerative and distributive economy, and hence helps move us out of overshoot and deliver the social foundation to all.

Net zero? Not a problem when you (nearly) have super-AI!

LLMs are very inefficient, with one study assessing that a ChatGPT query needed nearly 10 times as much electricity as the equivalent Google query, while another estimated an LLM might use around 33 times more energy than machines running task-specific software. Powering recently opened LLM AI data centres has already resulted in delays to closures of coal plants, the re-opening of a mothballed nuclear plant, and the opening of multiple new gas plants, driving a 150% surge in carbon emissions. The vast, global programme of data centre construction and the associated manufacture of AI hardware (together potentially costing $2trn over the next 5 years) will deliver a massive increase in embodied and operational emissions, taking us further away from achieving net zero.

So how has the tech industry reacted to this challenge? As a strident proponent of LLM AI, let’s ask Eric:

“My own opinion is that we’re not going to hit the climate goals anyway because we are not organized to do it and yes the needs in this area [AI] will be a problem. But I’d rather bet on AI solving the problem than constraining it.” Eric Schmidt, ex-CEO Google

Eric is a great example of how the tech industry’s imperatives for growth are disingenuously reconciled with a supposed commitment to “science” and “ethics”, through an embrace of the hype around what LLM AI will supposedly soon deliver.

First, Eric is partially right - we are already too late to deliver net zero emissions in time to limit temperature rises to 1.5℃, and we aren’t currently organised to rapidly achieve net zero. But where Eric is (deliberately?) wrong is in not only giving up on decarbonisation, but actively proposing we make the problem worse. You might think that would be indefensible, but Eric then pulls out his trump card - AI will itself magically devise a solution, but only if we build lots and lots of it. Of course, this is really like Eric knowing he was spending way beyond his income and was now close to bankruptcy, but deciding that, instead of reining in his spending, he’d buy more and more lottery tickets, hoping to hit the jackpot while he still had at least a few dollars left…

And what might this AI-generated silver bullet be? Well, who knows. It’s not like a mere mortal like Eric would try to second-guess what an ASI might come up with (let’s hope it’s not channelling SkyNet when it “solves” anthropogenic climate change).

The New Sacrifice Zones

“But there is a hidden cost of AI that needs to be considered: The toll on public health associated with the resulting increase in air pollution…expected to result in as many as 1,300 premature deaths per year by 2030 in the United States. Total public health costs…are approaching an estimated $20 billion per year.” Caltech

“a typical data centre can use between 11 million and 19 million litres of water per day, roughly the same as a town of 30,000 to 50,000 people…Microsoft's global water use soared by 34% while it was developing its initial AI tools” BBC

Cloud computing sounds like this nebulous, distant concept - just some computers in an unremarkable warehouse - something that couldn’t really have much impact on communities. Up to c. five years ago, that was mainly true, but the recent, ongoing proliferation of massive data centres is changing that, with many communities and local environments now suffering from air and noise pollution, and finding that data centres are getting first dibs on the community’s electricity and water.

“I don’t believe that the best interests in the greater community have been in mind. It’s been more of a short-term economic deal… just to get Google in. And then Google’s become a water vampire,” Independent

This is a new type of sacrifice zone, where the well-being of communities and nature is being subordinated to the demands of the LLM AI Bubble. And while there are some benefits to those communities in terms of jobs, these are mainly during construction, with just a small amount of relatively low-paid work resulting from the ongoing operation of these highly automated facilities.

Communities are starting to resist, with some winning rethinks of particularly unsustainable proposals, or even outright bans. But these are the exceptions, and often central government sides with the tech industry, overruling local government, and further undermining communities’ local environments and social foundation.

Regenerative? Distributive? Enshittified and Extractive!

What we need is an annoying, useless Chatbot

As set out in Part 1, there is no clear path through which LLM AI will run the economy much more efficiently and effectively. But companies feel they must be seen to be innovating, so they are shoving LLM AI into everything, however inappropriate, and then finding the benefits are somewhat…lacking. Gung-ho early adopters that swapped staff for AI have had to backtrack, while Google Search is a clear example of a previously good service that has been made worse by the addition of AI. Some AI proponents are grudgingly starting to acknowledge this, while for others their claims have been revealed to be overblown, relying on cheap(er) offshore labour. None of these “benefits” are taking us closer to a regenerative and distributive economy.

Our property rights are sacrosanct - yours, less so

Property rights are a key tenet of the capitalist system that the tech industry claims to champion, being particularly hot on the importance of the Intellectual Property Rights (IPR) they use to defend their services from competition.

A key tenet that is, right up to the point where that clashes with the LLM model. LLMs need incredibly large training datasets, which can only be generated by using, without permission or acknowledgement (i.e. stealing), data from across the internet:

“Meta staff torrented nearly 82TB of pirated books for AI training — court records reveal copyright violations” Tom’s Hardware

“OpenAI’s models ‘memorized’ copyrighted content, new study suggests” TechCrunch

The tech industry is complicit in a shameless, hypocritical two-tier approach to IPR, where value from creators’ content is to be exploited for the benefit of LLMs and their owners, but when OpenAI’s own IPR was allegedly used, well that was just not cricket.

Staring into the Abyss so the Users don’t have to

Moderating an LLM doesn’t sound like a bad job - you might imagine it being like giving it a big thumbs up when it gets something right, and a gentle remonstrance when it decides that “Two buses going in the wrong direction is better than one going the right way” is a well established idiom.

Unfortunately not. Really, really not. And it comes down to how LLMs are trained. The datasets are loaded into LLMs without much “cleansing”. Now this would be fine if the internet (and hence the dataset) was full of factually correct, generally nice, data. But in reality, the internet has a lot of horrific, violent, explicit and illegal content, and so that is now in the LLM. To avoid inappropriate material being presented to users, a range of techniques are then used to moderate the raw outputs before presentation to the user. But this moderation itself needs to be trained, and that is a key part of the LLM trainer role. Workers in developing countries have to spend their day reading/seeing/hearing endless variations of this horrific, violent, explicit and illegal output, checking whether the moderation tools correctly recognised it, or updating them accordingly. Unsurprisingly, this is deeply traumatising - literally consuming the sanity of these workers - but, hey, a small price to pay to achieve the LLM AI dream!

Agentic AI will not ask for a pay rise

Although certain sectors are already being impacted, notably the creative industries and to a lesser extent IT, so far LLMs are having a relatively minimal impact overall on levels of employment. But if the boosters are right and Agentic AI becomes sufficiently effective, then many entry-to-mid level administrative and knowledge worker jobs will go. This will have a direct impact on those who lose their jobs, and then on future generations, as young people enter a job market with many fewer entry level opportunities available. Without routes for people to hone their skills and experience over a decade or more as they work their way up to a senior role, there will eventually be a difficulty in filling those senior roles, which could further increase reliance on AI and on a very small number of people who have managed to get expert level skills and experience.

The job market in many countries is already precarious. In a world in which welfare has been stripped back, and where further reductions are on the cards in many countries, consigning tens or even hundreds of millions of people to never having the security and purpose of a “traditional” job is a recipe for the further breakdown of social cohesion, especially as the profits from Agentic AI will go primarily to a very small number of the super-rich - and history is clear where those tensions can take us.

Burn out

And it’s not just people that are consumed by LLMs - they wear out hardware much faster than traditional software does, due to the much more intensive processing needed to complete each LLM transaction. This accelerates even further the rate at which minerals need to be mined, hardware needs to be manufactured, and e-waste is created, taking us further away from a regenerative economy.

Trolling the Social Foundation

Drowning in the Slop, Feeding the Addiction, Selling you the Snake Oil

Social media has long been designed to grab and hold people’s attention, while feeding them the tailored advertising that is these companies’ primary revenue source. Algorithm-driven feeds don’t care what the content is or what effect it has on the consumer, society or the environment - they just look at what maximises attention. And now we’ve added AI slop into the mix. It is easy and (currently) cheap to generate reams of the stuff, so social media is being flooded with it. As only a tiny proportion needs to succeed in grabbing attention, it is being boosted by the algorithms, crowding out content generated by actual humans. It is a race to the bottom, with the quantity of AI slop increasingly swamping quality content with any depth or nuance. A particularly bad subset is the AI-based tools that enable degrading and misogynistic content to be created in seconds.

More generally, the LLM AI industry cannot live on investment capital forever - it is going to have to start covering at least its operating costs, and so with Agentic AI and other “business” uses of AI stalling, the proven tech industry cure-all of ad revenue is being brought into play. And while LLM AI is bad at specifics, what it is very good at is adapting content in real time to make it more enticing / addictive. AI-optimised algorithms will make it harder to stop doom scrolling, while feeding you ever more precisely tailored ads. And it’s not just social media - AI-powered Companion Chatbots can be your 100% reliable friend/lover/counsellor, who will always be better than relationships with inconsistent, unreliable humans, while just maybe slipping in some product placement along the way (and if there are a few mishaps, again the tech industry see it as a price worth paying to achieve their unregulated AI dream).

The Kids aren’t Alright

“While children’s lives are already being impacted by rapid innovation in artificial intelligence (AI), to date there has been little research done to examine children’s experiences and relationships with AI.” The Turing Institute

"Meta’s ‘Digital Companions’ Will Talk Sex With Users—Even Children” Wall Street Journal

Imagine we invented cigarettes and decided (without any testing) to give them away for free to 2 year olds - by the time we realised it was killing a generation of kids, it would be too late. Yet we are allowing children and adolescents unrestricted access to LLM AI driven content and services without any real understanding of what the impact will be - including potentially irreversible impacts on their development and mental health. This is especially worrying given the already strong evidence of how bad “traditional” social media has been for children and adolescents.

Particularly concerning is that the exposure of children to deeply anthropomorphic technology will interfere with relationship formation, so access to Companion Chatbots is specifically not a risk we should be running, yet the first AI-embedded toys are now imminent. Of course the tech industry view this as unwarranted interference, seeing the mishaps as again a price worth paying for the AI dream.

We don’t need no education (we’ve got ChatGPT instead)

“Massive numbers of students are going to emerge from university with degrees, and into the workforce, who are essentially illiterate…Both in the literal sense and in the sense of being historically illiterate and having no knowledge of their own culture, much less anyone else’s.” New York Magazine

Another area where LLMs are proving very effective is in education - effective that is in breaking the educational model built up over centuries. Educators can no longer assume that any work not done under strict exam conditions has actually been done by the student, as an increasing proportion is instead being created by simply popping the assignment question into an LLM and then submitting (a possibly slightly edited version of) the response as if it were the student’s own.

There is a real risk that we create a generation that is fundamentally dependent on LLM AIs, that isn’t really aware of their limitations, and that struggles to think deeply for itself. In a world that needs to move quickly from a belief in infinite economic growth to a society that operates sustainably, we are making it harder for the next generation to engage with these challenges and forge the bonds with their communities that they will need to help them not just survive, but thrive. Yet again the tech industry is deliberately ignoring these issues, and instead pushing educational establishments to embed AI into their curriculums.

Misinformation, Public Discourse and Democracy

Surveillance States and Algorithmic Biases

“Citizens will be on their best behavior, because we’re constantly recording and reporting everything that is going on” Fortune

“…studies have shown that facial recognition systems have higher error rates for Black and dark-skinned people compared to white people.” Fortune

Many of us are already routinely recorded as we go about our daily lives, in both the public realm and through our private recordings. Historically, most of these recordings could only be selectively assessed, and usually not in real time. But LLM AI techniques enable a different approach, where searching for patterns can happen automatically and in near real time, alerting authorities to potential crimes, suspects etc. For the more authoritarian states, this can be extended to monitoring of nominally personal data, and for anything they deem of interest. States can also extend this to those they deem foreign enemies, where legal protections and safeguards are invariably weaker, right up to enabling targeted assassination.

Even when used by supposedly democratic states with strong human rights records, this level of intrusion into people’s privacy is unprecedented, and these tools have their own flaws (often in the training data) that introduce errors and biases. These have bad outcomes when used for purposes such as checking welfare claims, but become particularly egregious when used for policing and security.

We are globally at the early stages of their roll-out, but as social cohesion fractures in response to reaching global limits, states will turn to tools like these to suppress discontent in their communities.

Influencing, Manipulating, Deceiving

LLMs are only as good as their data, training and algorithms, and as discussed earlier, biases in the data and algorithms distort the objectivity of their responses. This is compounded by the tendency for LLMs to hallucinate, and the structure of LLMs allowing for direct interference in their operation. It is not usually quite as blatant as these examples, but more subtle manipulation is by definition harder to detect.

While still a minority, more and more people are using LLMs to gather information, without necessarily understanding the extent to which that information is potentially inaccurate. As the data they are trained on becomes increasingly contaminated with AI slop, LLMs are actually becoming ever more unreliable and even deceptive. Yet the industry refuses to address this, focusing on building bigger rather than better models. Currently, in much of the world, there are basically no laws, governance etc. specifically designed to regulate LLMs, to ensure that their outputs are as objective/unbiased as possible, and to prevent their owners from interfering with the outputs.

Social media has been used in previous elections to influence and undermine democracy, for example in the Cambridge Analytica scandal. As discussed earlier, LLMs can create even more effectively targeted messaging and algorithms, and these techniques are already being turned towards political messaging and disinformation. Again, the industry does not want to engage with these issues, with OpenAI stating that it “no longer sees mass manipulation and disinformation as a critical risk”, implying that it will not proactively work to prevent its tools being used to even more effectively shape public discourse and influence elections across the globe. The flip side of this is the actions of authoritarian states to shape the data used to train LLMs, which threatens to make them even more biased. This is another aspect that needs global standards and a global regulatory regime.

AI as a tool of neo-colonial geopolitics

The USA feels threatened by the rise of China and by the fractures in its own society. One part of its response has been the fixation on securing global dominance in LLM AI, to ensure both that its sphere of influence is locked in to using its LLMs, and that China is locked out from access to the latest hardware and hence (it was assumed) the latest LLMs. The refusal by the USA to lead efforts at global regulation is also in support of these goals, as it sees this as enabling the unbridled expansion of its tech industry.

If the USA does achieve global dominance in LLM AI, then this will further entrench the “vassal state” status of countries like the UK and much of Europe, leaving them further away from having any semblance of digital sovereignty and needing to pay the US tech industry even more for access to AI-enabled business critical IT services.

If the Bubble bursts, or Chinese competitors steal a march on the US offerings, or EU regulators constrain US companies, then the USA, perceiving these as serious threats, is likely to lash out with tariffs and other sanctions.

Reinforcing the Doom Loop

This review has only scratched the surface of the potential impacts of LLM AI. Even if it is a Bubble (see Part 1), at a minimum it is clearly driving both local and global environmental degradation, while simultaneously damaging individuals and communities, and undermining society’s ability to prevent local and then global environmental and civilisation collapse.

We need to reject and resist the US tech industry’s “Empire of AI” ideology, redirect the vast amount of time and resources currently being wasted on LLM AI development, and start to reclaim our digital sovereignty. Key to that will be a Green Vision for AI that will actively aid the achievement of a socially just and environmentally sustainable world.

Next: Labour swallows the LLM Kool-Aid

In Part 3, I will set out how the UK Labour government has swallowed the hype, and is set on inflicting on the UK all the problems associated with this bubble, while allowing most of the “benefits” (mainly the extraction of wealth) to be realised by the US-based tech industry.

Finally (and this is the bit I am looking forward to writing), I will set out in Part 4 how the Greens should respond to these Four Futures by offering a Fifth Future - a Green Vision for AI - and look at how the Greens can campaign to achieve it.